Win7 + Python 3.7 + Scrapy 1.5 + Docker Toolbox + Splash v3.2 + scrapy-splash

Note: Splash v3.2 runs inside the Docker Toolbox virtual machine; everything else is installed directly on Win7.

I. Code

1. kandian_spider.py

#__author__ = 'Administrator'

import scrapy

import scrapy_splash

import myScrapy_1.items as Scrapyitems

class kandian(scrapy.Spider):

name = 'kandian'

start_urls = [

'http://kandian.youth.cn/index/lists?type=3'

]

def start_requests(self):

for url in self.start_urls:

yield scrapy_splash.SplashRequest(url,self.parse,args={'wait':'0.5'})

def parse(self, response):

site = scrapy.Selector(response)

it_list = []

it = Scrapyitems.Myscrapy1Item()

#获取标题

title = site.xpath('//div[@class="txt_title"]/h2/text()')

dtitle = title.extract();

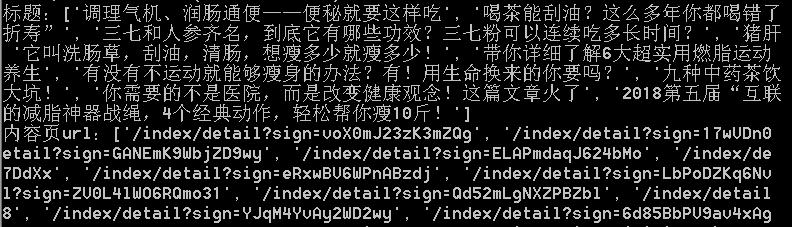

print('标题:%s'% dtitle)

#获取文章详情页url

cont_url = site.xpath('//ul[@class="news_list"]/li/a/@href');

dcont_url = cont_url.extract();

print('内容页url:%s'% dcont_url)2、item.py

import scrapy


class Myscrapy1Item(scrapy.Item):
    # define the fields for your item here like:
    # name = scrapy.Field()
    title = scrapy.Field()
    cont_url = scrapy.Field()
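The two fields defined above hold whatever the spider extracts. As a minimal sketch of how one record per article might be built, the snippet below pairs each title with its detail-page link and resolves relative links against the listing URL. It assumes the two XPath results come back in the same order with one entry per article, which the original does not verify; `pair_articles` and the sample values are hypothetical and use only the standard library.

```python
from urllib.parse import urljoin

# Listing page taken from start_urls in the spider.
LIST_URL = 'http://kandian.youth.cn/index/lists?type=3'


def pair_articles(titles, hrefs, base_url=LIST_URL):
    """Hypothetical helper: build one dict per article.

    Assumes titles and hrefs are aligned (same order, same length);
    zip() silently stops at the shorter list if they are not.
    """
    articles = []
    for title, href in zip(titles, hrefs):
        articles.append({
            'title': title.strip(),
            # urljoin leaves absolute URLs untouched and resolves relative ones
            'cont_url': urljoin(base_url, href),
        })
    return articles


if __name__ == '__main__':
    # Made-up sample values standing in for the .extract() results.
    sample_titles = [' First headline ', 'Second headline']
    sample_hrefs = ['/n/1.html', 'http://kandian.youth.cn/n/2.html']
    for article in pair_articles(sample_titles, sample_hrefs):
        print(article)
```

Inside `parse`, each dict could be copied into its own `Myscrapy1Item` and yielded separately, provided the alignment assumption holds for the page.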

3. settings.py

BOT_NAME = 'myScrapy_1'

SPIDER_MODULES = ['myScrapy_1.spiders']
NEWSPIDER_MODULE = 'myScrapy_1.spiders'

# Address of the Splash instance running in Docker Toolbox
SPLASH_URL = 'http://192.168.99.100:8050'

ROBOTSTXT_OBEY = True

SPIDER_MIDDLEWARES = {
    # 'myScrapy_1.middlewares.Myscrapy1SpiderMiddleware': 543,
    'scrapy_splash.SplashDeduplicateArgsMiddleware': 100,
}

DOWNLOADER_MIDDLEWARES = {
    # 'myScrapy_1.middlewares.Myscrapy1DownloaderMiddleware': 543,
    'scrapy_splash.SplashCookiesMiddleware': 723,
    'scrapy_splash.SplashMiddleware': 725,
    'scrapy.downloadermiddlewares.httpcompression.HttpCompressionMiddleware': 810,
}

II. Execution flow

1. Start Docker Toolbox.

2. In Docker Toolbox, run $ docker run -p 8050:8050 scrapinghub/splash to start Splash.

3. Open a Win7 cmd window.

4. In the Win7 command window, change to the project directory and run scrapy crawl kandian:

D:\PycharmProjects\myScrapy_1>scrapy crawl kandian

5. Check the results.
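Before running the crawl in step 4, it can help to confirm that Splash is reachable from Win7. The sketch below builds a request to Splash's `render.html` endpoint using the SPLASH_URL from settings.py and the same 0.5 second wait as the spider, then fetches it with the standard library. `build_render_url` and `splash_is_up` are hypothetical helper names, and the 5 second timeout is an arbitrary assumption.

```python
from urllib.parse import urlencode
from urllib.request import urlopen

SPLASH_URL = 'http://192.168.99.100:8050'  # same value as in settings.py
TARGET_URL = 'http://kandian.youth.cn/index/lists?type=3'


def build_render_url(target, wait=0.5, splash_url=SPLASH_URL):
    """Hypothetical helper: URL asking Splash to render `target`."""
    query = urlencode({'url': target, 'wait': wait})
    return '%s/render.html?%s' % (splash_url.rstrip('/'), query)


def splash_is_up(target=TARGET_URL, timeout=5):
    """Hypothetical helper: True if Splash answers with HTTP 200."""
    try:
        with urlopen(build_render_url(target), timeout=timeout) as resp:
            return resp.status == 200
    except OSError:
        # URLError, HTTPError and socket timeouts are all OSError subclasses.
        return False


if __name__ == '__main__':
    print(build_render_url(TARGET_URL))
    print('Splash reachable:', splash_is_up())
```

If this reports that Splash is not reachable, check that the container from step 2 is still running and that 192.168.99.100 matches the address of the Docker Toolbox VM (`docker-machine ip` should report it).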

When reposting, please credit the source: 谷谷点程序 » python3.7+scrapy1.5+docker Toolbox+Splash v3.2+scrapy-spl: a simple example of scraping a JS dynamic web page